A Chad for everyone

you know you can run your own LLM right?

If you have an Apple Silicon computer (M-processors), you can simply download ollama, have ollama download llama3 and boom you're in business. All local.

This might need a bit of unpacking.

So, you've heard of LLMs. Large Language Models. ChatGPT has given us access to a few of these. At first there was 3.5, then 4 and now the latest is 4o. All of these are models. Models are trained, first, and then models are run.

Training a model takes ungodly amounts of compute power. In fact it takes GPU power more than CPU power. It takes beyond GPU power as nVidia produces H100s and other such enormous purpose-made cores. (Footnote: Bitcoin energy consumption is to AI what steam power is to a ramjet engine)

But running a model doesn't take as much power as it takes to train one. Your macbook can run a model.

Well, it depends, some models are more beefy than others. But just because a model needs performance doesn't mean it's particularly well trained.

In April, Meta released Llama 3 which is efficient, fast, and capable. They released it in the sense that you can download and run it. It's a few GBs in size. All you need to do is have some kind of application to run it. ollama is one of these. It's incredibly simple to install and run.

If you have a broadband connection, you can be running better-than-GPT3.5 on your machine in a few minutes. At $0 cost.

Feel free to download other models and compare responsiveness. Llama3 really struck a nice middle ground for me because the speed at which it answers is human-readable. But bigger models will behave very slowly on my laptop and it's like a word every 10 seconds. Fun to witness.

It gets better.

You might have heard of Hugging Face. The reason I know of them, now, is because of the Hugging Face Hub where models are shared and collaborated on. Think of github with forks and branches but on models. Meaning you can take a model that's been released and retrain it on material that pertains to you. Or... you can remove some of the training boundaries that it had. That's where Dolphin comes in. In May 2023 Eric Hartford explained how and why he uncensored models.

So, it's not quite GPT-4o but you can have, on your machine, a model that will not bend to the rules of another maker. It will just tell you the knowledge you're seeking. Let your imagination run for a bit.

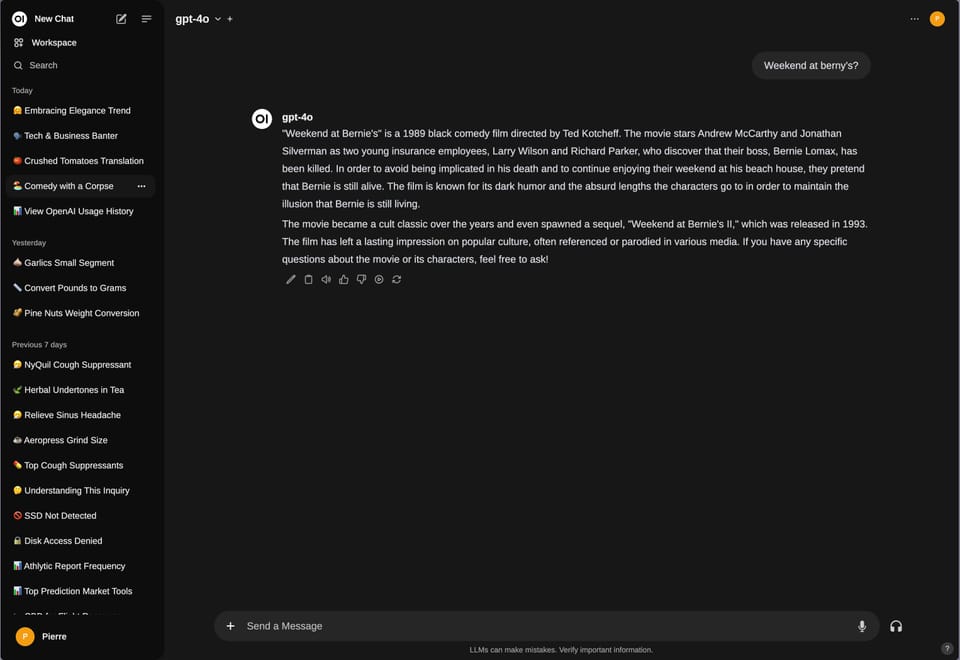

Okay, and finally, there is the interface layer. When you use ChatGPT you have a nice-ish web interface for your chats. Anthropic does this with Claude as well. Ollama doesn't have this out of the box but Open-WebUI has been iterating at the speed of lightning and, I would argue, its interface is now better than ChatGPT. It'll save your chats, it'll make a nice little summary with emoji for all of them, it'll support multi-modal, voice, pictures, all that and 1,000 other features that I haven't understood yet.

Not only this but Open-WebUI will let you connect to many models. it's just a front-end. No matter where your models are running. And who's running them. Maybe you have ollama running on an old gaming computer in your basement. Just connect Open-WebUI to it and you now have your own ~ChatGPT, essentially. But if you have signed up for the OpenAI API then you can also simply enter an API key in Open-WebUI and all of a sudden, everyone who has an account on your Open-WebUI instance can make GPT-4o requests. Or Claude. And you pay by the drip.

It's like discovering you have a cave in your backyard and within it are kept many genies of varying types.

[UPDATE:] And for those reading on the web, check out this video. A bit melodramatic but many shocking moments: